Late last year, Joseph Franklin, a professor at Florida State University, came to a daunting conclusion: after exhaustively examining hundreds of suicide prediction studies, he found that we had made no progress in the last 50 years. Science could not predict suicide.

But, it seems, artificial intelligence has reasons that science still does not understand. In recent months, several projects have made great strides in predicting this very serious problem. According to the WHO, 800,000 people die by suicide every year, so this is an (algorithmic) ray of hope.

The algorithms that can predict the future

It is curious that the first major finding in predicting suicide comes from Joseph Franklin’s own research group. The second author of Franklin’s study, Jessica Ribeiro, leads the team that is developing a truly accurate algorithm: it is able to predict who will commit suicide in the next two years with 80 percent accuracy.

Although the article has not yet been published in Clinical Psychological Science, the data we have seen show that, when the algorithm was applied to patients from general hospitals, it predicted suicides a week in advance with a precision of 92%.

The results, pending replication and closer scrutiny, are extraordinary. Franklin’s team needed more than 2,000,000 clinical records to train the algorithm to detect suicide patterns. And, according to Ribeiro herself, this is only the beginning.

Beyond the University

And, of course, one wonders: if a small group of researchers can do that with two million medical records, what could a large social network do, with not only many more profiles but also much more data?

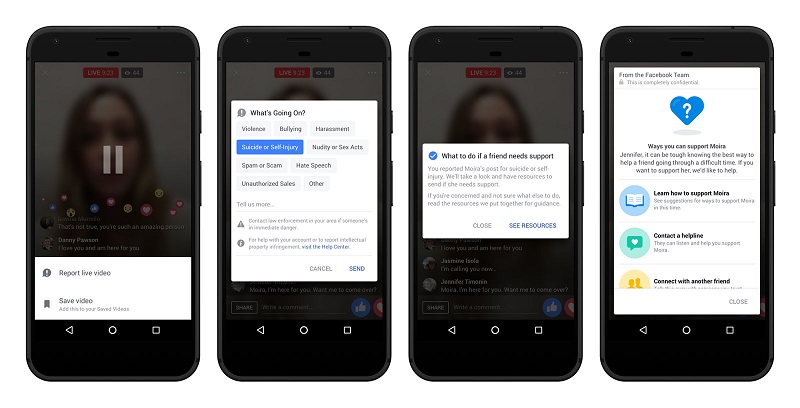

Facebook was confronted with that question after Katelyn Nicole Davis, a 12-year-old girl, broadcast her suicide on social media last year. In response, it has begun using artificial intelligence to identify members who may be at risk of suicide.


In fact, suicide prevention is the first major semantic content control task that Facebook is tackling with artificial intelligence. The tool is being tested in the United States, and the algorithms are being developed with the help of experts and social organizations. As they explained to the BBC, “it’s not only useful, it’s critical.”

In essence, the algorithms recognize patterns in the content that users post and in the reactions of their contacts. Talking about sadness or pain in certain ways could be a sign, as could comments from contacts such as “Are you okay?” or “I’m worried about you.”
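To make the idea concrete, here is a deliberately simple sketch of signal counting over a post and its comments. It is not Facebook's system, whose details are not described here; the phrase lists, the `Post` type, the function names, and the threshold are all hypothetical, and a real classifier would be a trained model rather than substring matching.

```python
from dataclasses import dataclass, field

# Hypothetical phrase lists, chosen only for illustration.
POST_SIGNALS = ("sad", "pain", "hopeless")
COMMENT_SIGNALS = ("are you okay", "i'm worried about you")


@dataclass
class Post:
    text: str
    comments: list[str] = field(default_factory=list)


def count_matches(text: str, phrases: tuple[str, ...]) -> int:
    """Count how many of the given phrases occur in the text (case-insensitive)."""
    lowered = text.lower()
    return sum(1 for phrase in phrases if phrase in lowered)


def risk_signals(post: Post) -> int:
    """Add up signals found in the post itself and in its contacts' comments."""
    own = count_matches(post.text, POST_SIGNALS)
    from_contacts = sum(count_matches(c, COMMENT_SIGNALS) for c in post.comments)
    return own + from_contacts


def needs_review(post: Post, threshold: int = 2) -> bool:
    """Flag the post for human review once enough signals accumulate (assumed threshold)."""
    return risk_signals(post) >= threshold
```

Calling `needs_review(Post("I feel so sad", ["Are you okay?"]))` flags the post because one signal comes from the author and one from a contact. Plain substring matching is crude (it would also match "sadly"), which is one reason real systems rely on learned patterns and human reviewers.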

What to do?

Once a message is identified and a pattern is detected, it is sent to a specialized team. That is where the easy part ends: the serious problem is what to do next. How can we intervene so that the suicide does not happen?

We have talked about it on other occasions: privacy and health are two ethical principles in constant conflict. To what extent can we use the private data a person generates to recommend medical treatment to them? And, beyond that, how can we use those predictions to intervene without losing information along the way?

It is worth noting that we are moving in a little-studied field, but if these algorithms have a real impact on our digital lives, we will have taken a giant step. And we are about to witness one of the most important health debates of the future: how to protect both health and privacy in a digitized world.

